What if you could decode the logic behind any piece of content? Imagine looking at an AI-generated text and knowing exactly what prompt created it. This is the power of Reverse Prompt Engineering (RPE), which is the process of analyzing an AI-generated output to infer the prompt that could have produced it. This process is more than an academic exercise; it's a powerful technique that enhances prompt design, deepens our understanding of AI behavior, and helps us deconstruct and improve technical content. By analyzing existing content, whether it was written by a human or AI, we can deduce its purpose, target audience, and underlying assumptions. RPE can also help us learn best practices, compare output from different AI models, diagnose why a piece of content is unclear, anticipate user needs based on the technical context, and ultimately, sharpen our own prompt design skills.

Prompt Engineering vs. Reverse Prompt Engineering

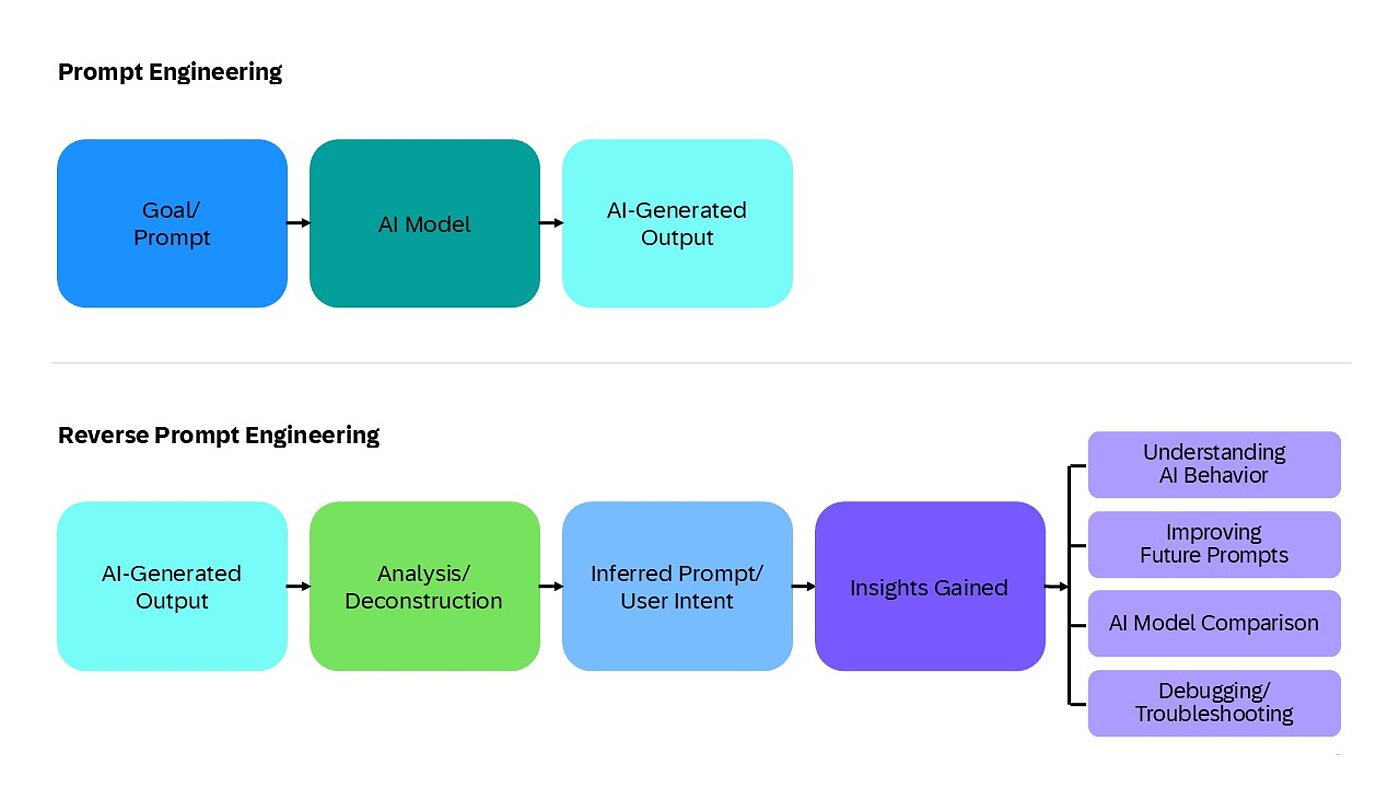

While standard prompt engineering is about construction (building a prompt to get a desired output), RPE is about deconstruction (analyzing an output to understand its origins). The two are sides of the same coin. The table below outlines the key differences.

| Aspect | Prompt Engineering | Reverse Prompt Engineering |
| --- | --- | --- |
| Starting Point | A goal or task you want the AI to perform | An existing AI-generated output |
| Objective | Design a prompt to get a desired result | Infer the prompt or reasoning behind a given result |
| Use Case | Generating content, solving problems, guiding AI | Debugging, learning, analyzing, and improving prompt quality |
| Mindset | Constructive: "What should I ask?" | Analytical: "What was likely asked?" |
| Example | "Write a summary of this article in plain English." | "Given this summary, what was the original prompt?" |

Practical applications: Deconstructing content with RPE

RPE shines when applied to the kind of content technical communicators work with daily. The following scenarios illustrate how RPE can be applied across creative, technical, and structured content to uncover the logic behind AI-generated outputs.

Example 1: Creative writing (plot deduction)

Even in creative contexts, RPE helps uncover the building blocks of a narrative.

Given output (story excerpt):

"The old, rusty clock tower in Neolumenreach finally struck midnight, and with its final chime, the city's power grid failed. Amidst the chaos, a lone figure in a tattered trench coat, illuminated only by the emergency glow-strips, found a cryptic message etched into the base of a forgotten statue: 'The truth is in the sound of silence.'"

RPE prompt:

"Based on this story excerpt, what plot points, character motivations, or preceding events might have led to this scene? What genre elements are present, and what prompt could have generated an excerpt in this style?"

Potential AI analysis:

- Plot points: The analysis would suggest a plot involving a widespread technological failure, hinting at sabotage. The cryptic message points towards a central mystery.

- Character motivations: The “lone figure” is likely a protagonist who was anticipating this event, driven by a search for truth or justice.

- Genre elements: The output contains strong elements of cyberpunk (futuristic city, tech failure) and noir/mystery (lone detective figure, cryptic clues).

- Inferred prompt: A likely prompt would be: "Write a cyberpunk-noir story excerpt set in a crumbling futuristic city. The scene should take place at midnight during a major system failure. The protagonist discovers a cryptic message hinting at a larger conspiracy."

Example 2: Deducing the audience from a user manual

While creative writing offers a rich space for narrative deduction, RPE is equally powerful in technical contexts. This example shows how RPE can analyze a procedural snippet to understand its intended user.

Given output (user manual excerpt):

“Section 3.2: Calibrating the XYZ Sensor

To ensure accurate readings, the XYZ sensor must be calibrated after initial installation and every 500 operating hours.

- Connect the calibration jig to Port A.

- Navigate to the 'Settings' menu on the control panel.

- Select 'Sensor Calibration' and then 'XYZ Sensor.'

- Press and hold the 'Calibrate' button for 10 seconds, until the status LED turns solid green.

- Confirm calibration on the control panel.”

RPE prompt:

"Given this manual excerpt, infer the target audience, the section's purpose, and the assumed technical knowledge. What kind of product is this?"

Potential AI analysis:

- Likely target audience: Field technicians or maintenance personnel. The instructions are specific, hands-on, and involve specialized tools (“calibration jig”), which a general consumer would not have.

- Primary purpose: To provide clear, sequential instructions for a critical maintenance procedure needed to ensure the device operates accurately.

- Level of technical knowledge assumed: A moderate level. The user is expected to understand terms such as “Port A,” “status LED,” and “control panel” without further explanation.

- Kind of product/system: An industrial or professional-grade device, such as a manufacturing machine or scientific instrument, that includes a sensor requiring periodic recalibration.

Example 3: Analyzing a glossary for critical context

Beyond procedural content, RPE can also unpack the significance of terminology. A single glossary entry, for example, can reveal much about a product's risks and priorities.

Given output (glossary entry):

“Thermal Runaway: A dangerous, self-accelerating rise in temperature that can lead to destructive outcomes. In battery systems, an internal fault may raise the temperature in one area, triggering exothermic reactions that spiral out of control. This chain reaction can ignite fires or cause explosions.”

RPE prompt:

"Based on this glossary definition of 'Thermal Runaway', what type of documentation is it from? Why is this term included, and what does it imply about the product?"

Potential AI analysis:

- Type of technical documentation: The emphasis on "destructive outcomes," "fires," and "explosions" strongly suggests this is from a safety manual, a product safety data sheet (SDS), or a design specification for a high-risk system.

- Significance: This term is included because “Thermal Runaway” represents a significant and potentially catastrophic failure mode. Its inclusion highlights a critical risk that must be understood for safe operation and maintenance.

- Most likely product/technology: Battery systems, especially lithium-ion batteries used in electric vehicles, consumer electronics, or large-scale energy storage.

- Potential risks: The term’s presence signals that the product carries an inherent risk of overheating and fire if not designed, handled, or maintained correctly. This implies that thermal management and safety protocols are paramount.

Example 4: Inferring system architecture from an API reference

Even structured API documentation can be reverse-engineered to understand a system's design.

Given output (API reference snippet):

POST /api/v1/users/{userId}/orders

Creates a new order for a specific user.

Request body (JSON):

{ "items": [ {"productId": "string", "quantity": "integer"} ], "shippingAddress": "string", "paymentMethodId": "string" }

Responses:

- 201 Created - The order was created successfully.

- 400 Bad Request - The request was invalid (for example, missing required fields).

- 401 Unauthorized - Authentication failed or user is not authorized.

- 404 Not Found - The specified user does not exist.

RPE prompt:

"From this API snippet, what kind of application is this for? What are its key entities and security model? What are the implicit dependencies?"

Potential AI analysis:

- Kind of application: An e-commerce platform or any online service that facilitates ordering products.

- Key entities/resources: The system clearly manages Users, Orders, Products, Payment Methods, and Addresses.

- System's security model: The 401 Unauthorized response indicates that the API requires authentication. Users must be logged in or provide a valid token.

- Implicit dependencies: The API structure reveals key relationships: an Order is tied to a User; an Order consists of Products; and an Order requires both a shippingAddress and a paymentMethodId. This suggests separate but interconnected services or modules.
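The inferred entities and dependencies can be written down as a small data model. The following is a minimal Python sketch, not the actual implementation behind the API: the class names `Order` and `OrderItem`, the method `to_request`, and the sample IDs are hypothetical, and only the path and the request-body field names come from the snippet.

```python
from dataclasses import dataclass


@dataclass
class OrderItem:
    product_id: str  # reference to a Product entity
    quantity: int


@dataclass
class Order:
    user_id: str  # an Order is tied to a User (the {userId} path parameter)
    items: list[OrderItem]  # an Order consists of Products
    shipping_address: str
    payment_method_id: str  # reference to a Payment Method entity

    def to_request(self) -> tuple[str, dict]:
        """Return the path and JSON-serializable body shown in the snippet."""
        path = f"/api/v1/users/{self.user_id}/orders"
        body = {
            "items": [
                {"productId": item.product_id, "quantity": item.quantity}
                for item in self.items
            ],
            "shippingAddress": self.shipping_address,
            "paymentMethodId": self.payment_method_id,
        }
        return path, body


# Hypothetical sample values, for illustration only.
order = Order("u-42", [OrderItem("sku-1", 2)], "1 Example Street", "pm-7")
path, body = order.to_request()
print(path)
print(body)
```

Writing the model out makes the analysis testable: if a field in the documented request body has no home in the inferred entities, the analysis has probably missed a dependency.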

RPE in the broader AI ecosystem

RPE doesn’t exist in a vacuum; it enhances several other advanced prompting strategies. By understanding how RPE connects to these adjacent concepts, you can build a more holistic and powerful approach to working with AI systems:

- Chain-of-thought prompting: When an AI output shows multi-step reasoning, RPE can dissect that logic. By analyzing how a conclusion was reached, you can reverse-engineer the "chain of thought" and learn how to structure future prompts for complex problem-solving or decision-making processes.

- Context engineering: Every prompt carries implicit context like domain knowledge, tone, or user intent. RPE helps uncover what contextual cues were likely present in the original prompt, allowing you to design a more effective context for future interactions. This is particularly valuable when working with long-form generation or multi-turn interactions.

- Retrieval-Augmented Generation (RAG): In RAG systems, RPE helps determine if the retrieved documents were used effectively. By analyzing the output, you can infer which parts of the retrieved information were most influential, or if the model ignored the context entirely. This insight can guide improvements in retrieval strategies or prompt design.

Together, these connections position RPE as a foundational skill that enhances not just prompt writing, but also model interpretability, system design, and user experience.
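For the RAG case, one rough way to check whether retrieved documents left a trace in the output is to measure word overlap. The sketch below is a crude heuristic under stated assumptions: the function names, the four-character token filter, and the sample texts are all invented for illustration, and lexical overlap only suggests influence; it does not prove it.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens of four or more characters (a crude stop-word filter)."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) >= 4}


def overlap_scores(output: str, documents: dict[str, str]) -> dict[str, float]:
    """For each document, the fraction of its tokens that also appear in the output."""
    output_tokens = tokens(output)
    scores = {}
    for name, text in documents.items():
        doc_tokens = tokens(text)
        scores[name] = len(doc_tokens & output_tokens) / len(doc_tokens) if doc_tokens else 0.0
    return scores


# Invented sample data, for illustration only.
retrieved = {
    "battery_safety": "Thermal runaway is a self-accelerating rise in temperature inside battery cells.",
    "shipping_policy": "Orders ship within three business days from the regional warehouse.",
}
answer = "Thermal runaway can cause battery cells to overheat as temperature keeps rising."

scores = overlap_scores(answer, retrieved)
for name, score in sorted(scores.items(), key=lambda pair: pair[1], reverse=True):
    print(f"{name}: {score:.2f}")
```

A score near zero for every retrieved document is a hint that the model may have ignored the context, which is worth confirming by reading the output against the sources.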

Challenges and limitations

While powerful, RPE is an interpretive method, and its constraints affect the accuracy and reliability of what it infers.

- Subjectivity and multiple interpretations: There is rarely a single "correct" prompt for an output. Multiple prompts with different wording can yield similar results, making RPE inherently subjective.

  Example: A summary of a news article could be generated by prompts like “Summarize this article in plain English” or “Give me a concise overview of this article’s main points.” Both are valid, but subtly different.

- Ambiguity in outputs: If an AI output is vague or generic (such as "Teamwork is important"), it’s nearly impossible to deduce a specific, meaningful prompt.

  Example: If an AI generates a sentence like “Innovation is key to success,” it’s nearly impossible to determine whether the original prompt was about business strategy, motivational quotes, or startup culture.

- Loss of context: RPE operates without access to the full system context, such as temperature settings, system prompts, user history, or retrieved documents in RAG systems. This limits the accuracy of any inference.

  Example: A helpful AI response might rely on a retrieved document or a prior user message. Without that context, RPE can only guess what influenced the output.

- Bias detection without root cause: RPE can surface biases in outputs (for example, gendered language), but it may not reveal whether the bias originated from the prompt, the training data, or the model's internal reasoning.

  Example: If an AI consistently associates certain professions with specific genders, RPE might identify the pattern but not explain whether it was prompted or learned.

- Limited use for non-text outputs: While possible, RPE is most effective with natural language. Inferring the prompt behind, for example, a generated image or complex code snippet is far more speculative and requires deep domain expertise and assumptions about the model’s capabilities.

RPE acts like a diagnostic tool; it helps you infer the structure and intent behind an AI output, but it doesn’t expose the full prompt logic or system parameters that influenced the result.
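Because several prompts can plausibly explain the same output, one practical check is to run each candidate prompt through the model and compare what comes back with the original. The sketch below covers only the comparison step and uses Python's standard `difflib`. The function names and sample texts are invented placeholders; in practice the regenerated texts would come from the model. A high score means a candidate is plausible, not that it was the original prompt.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Character-level similarity in the range 0.0 to 1.0, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def rank_candidates(target: str, regenerated: dict[str, str]) -> list[tuple[str, float]]:
    """Sort candidate prompts by how closely their regenerated output matches the target."""
    scored = [(prompt, similarity(target, text)) for prompt, text in regenerated.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Invented placeholder texts; real ones would be produced by the model.
target_output = "The city council approved the new transit budget on Tuesday."
regenerated_outputs = {
    "Summarize this article in plain English.":
        "The city council approved the new transit budget on Tuesday evening.",
    "Write a poem about this article.":
        "O transit, budget bright, approved beneath the council's light.",
}

ranked = rank_candidates(target_output, regenerated_outputs)
for prompt, score in ranked:
    print(f"{score:.2f}  {prompt}")
```

Character-level matching is deliberately simple. It rewards shared wording rather than shared meaning, so it fits best when the candidates aim at the same format and length as the original.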

RPE checklist

Use the following checklist as a starting point for your own RPE prompts:

- What is the format of the output? (for example, manual, glossary, API, procedure)

- Who is the intended audience?

- What assumptions does the content make?

- What tone or style is used?

- What domain knowledge is implied?

- What prompt could have led to this output?

- Are there alternative prompts that might yield similar results?
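The checklist can be turned into a reusable prompt template. This is a minimal Python sketch: the function name `build_rpe_prompt`, the instruction wording, and the `---` delimiters are illustrative choices, not a prescribed format.

```python
from typing import Sequence

CHECKLIST: tuple[str, ...] = (
    "What is the format of the output? (for example, manual, glossary, API, procedure)",
    "Who is the intended audience?",
    "What assumptions does the content make?",
    "What tone or style is used?",
    "What domain knowledge is implied?",
    "What prompt could have led to this output?",
    "Are there alternative prompts that might yield similar results?",
)


def build_rpe_prompt(content: str, questions: Sequence[str] = CHECKLIST) -> str:
    """Wrap a piece of content and a numbered list of questions into one RPE prompt."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    return (
        "Analyze the content between the --- markers and answer each question.\n\n"
        f"---\n{content}\n---\n\n"
        f"Questions:\n{numbered}"
    )


prompt = build_rpe_prompt("Connect the calibration jig to Port A.")
print(prompt)
```

Passing a shorter `questions` sequence narrows the analysis, for example to audience and assumptions only when reviewing a procedure.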

Conclusion

Reverse Prompt Engineering is a vital skill for modern technical communicators. It moves beyond simply generating content to a deeper understanding of how AI interprets instructions and constructs meaning. By learning to "think backward" from an output to a potential prompt, you can diagnose content issues, learn from existing examples, improve documentation quality, and ultimately write more effective prompts yourself. As AI becomes further integrated into our workflows, this analytical skill will be essential for anyone looking to not just use AI but to master it, ensuring content is always clear, purposeful, and precise in an AI-driven world.

Further reading

- Learn Prompting Org: Reverse Prompt Engineering

  A clear, step-by-step guide that explains how RPE works using only model outputs, optimization strategies, and practical examples.

- Springs Apps: Comprehensive Guide to Reverse Prompt Engineering

  This guide covers how RPE is used in brand generation, chatbot tuning, and AI auditing, with insights for both beginners and advanced users.

- HogoNext: 10 ChatGPT Prompts for Reverse Engineering

  A practical list of prompts designed to help you analyze and reverse-engineer AI-generated content and improve your understanding of how prompts shape outputs.