Artificial intelligence has moved from experimental technology to production infrastructure. For technical communicators, Large Language Models (LLMs) are now embedded in documentation systems, support workflows, and knowledge management platforms. Early AI adoption focused on crafting effective prompts – the right words, examples, and formatting – to elicit useful responses. But as enterprise use cases have grown more complex, a fundamental limitation has emerged: Prompts alone cannot sustain reliable, scalable AI systems.

The solution is context engineering, a discipline that manages the complete information environment surrounding an AI model, not just the immediate instruction. For organizations with large-scale documentation needs, context engineering is becoming essential to deliver consistent, accurate, and adaptive AI solutions across users, tasks, and time.

From prompts to context: Understanding the shift

While prompt engineering focuses on crafting the perfect request, context engineering focuses on constructing the perfect knowledge state before the request is even made. This distinction matters because enterprise AI systems must operate across multiple sessions, remember user preferences, retrieve domain-specific knowledge, integrate with external tools, and coordinate multi-step workflows. These are requirements that extend far beyond a single well-crafted prompt.

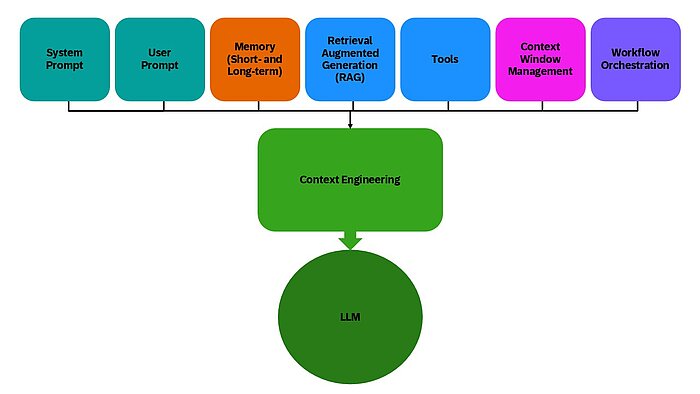

Context engineering treats AI not as a conversational partner but as a system component requiring governance, versioning, and continuous maintenance. The discipline combines prompts with Retrieval Augmented Generation (RAG), memory management, system instructions, tool integration, context window optimization, and workflow orchestration.

| Feature | Prompt engineering | Context engineering |
| --- | --- | --- |
| Scope | Instructions (simple or complex) | Complete information environment |
| Purpose | Specific response | Consistent performance over time |
| Longevity | One-off or shorter tasks | Long-running systems and agents |
| Methods | Wording, examples, formatting | RAG, memory, system prompts, tools, orchestration |
| Scalability | Manual adaptation | Designed for reuse across users and tasks |

For technical communicators, this shift enables documentation systems that remember user history, adapt explanations to expertise levels, and maintain consistency across large content ecosystems.

Core methods: Building context-driven workflows

Retrieval Augmented Generation (RAG)

RAG connects AI to external knowledge sources such as product manuals, support databases, and internal wikis. Instead of relying only on static training data, the model answers from retrieved passages, so responses can reflect current information, provided the sources themselves are accurate and up to date. For documentation teams, this means AI assistants can answer user questions by retrieving the latest product specifications or troubleshooting procedures at query time.
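The retrieval step can be sketched in a few lines. This is a minimal illustration, not a production design: it assumes a tiny in-memory knowledge base (the `DOCS` entries are hypothetical) and substitutes bag-of-words cosine similarity for the embedding model and vector store a real RAG system would typically use. The helper names `retrieve` and `build_prompt` are illustrative.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; real sources would be manuals, wikis, or support tickets.
DOCS = {
    "install-guide": "To install the agent, download the package and run the installer as administrator.",
    "reset-password": "To reset your password, open Settings, choose Security, and select Reset password.",
    "release-notes": "Version 2.1 adds offline mode and fixes a sync error on startup.",
}


def tokenize(text: str) -> Counter:
    """Lowercased word counts: a crude stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict, k: int = 2) -> list:
    """Return the k (doc_id, text) pairs most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs.items(), key=lambda item: cosine(q, tokenize(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict, k: int = 2) -> str:
    """Put retrieved passages ahead of the question so the model answers from them."""
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query, docs, k))
    return (
        "Answer using only the sources below and cite their IDs.\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("How do I reset my password?", DOCS))
```

Including source IDs in the prompt and asking the model to cite them makes it easier for reviewers to trace an answer back to the document it came from.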

Memory management

Memory systems track conversation history, user preferences, and project-specific context across sessions. A documentation assistant can remember that a particular team prefers British English spelling, uses specific terminology conventions, or has completed certain content modules, eliminating repetitive setup and maintaining continuity.
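A minimal sketch of cross-session memory, assuming a JSON file is an acceptable store (a real deployment might use a database or a vendor's memory feature). The `MemoryStore` class, the team ID, and the preference keys are hypothetical; the point is that what one session records, a later session reloads and adds to the model's context.

```python
import json
import tempfile
from pathlib import Path


class MemoryStore:
    """Hypothetical per-team preference store persisted as JSON between sessions."""

    def __init__(self, path: Path):
        self.path = path
        # Reload whatever earlier sessions saved; start empty on first use.
        self.data = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}

    def remember(self, team: str, key: str, value: str) -> None:
        """Record a preference and write it to disk immediately."""
        self.data.setdefault(team, {})[key] = value
        self.path.write_text(json.dumps(self.data, indent=2), encoding="utf-8")

    def context_for(self, team: str) -> str:
        """Render a team's stored preferences as lines to add to the model's context."""
        prefs = self.data.get(team, {})
        return "\n".join(f"- {key}: {value}" for key, value in sorted(prefs.items()))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "memory.json"
        # Session 1 learns two preferences.
        MemoryStore(path).remember("docs-emea", "spelling", "British English")
        MemoryStore(path).remember("docs-emea", "product name", "Always 'ExampleApp'")
        # Session 2 (a fresh object) starts with that knowledge already loaded.
        print(MemoryStore(path).context_for("docs-emea"))
```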

System prompts

Unlike user-facing prompts, system prompts establish foundational behavior: the role, tone, constraints, and escalation rules that persist throughout an interaction. A technical communication assistant might operate under a system prompt specifying "clear, concise language for non-technical audiences; avoid jargon; provide step-by-step instructions; cite source documentation."
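In code, a system prompt is typically the first message of every request. The sketch below assumes the role/content message format that many chat APIs use; field names and where the system prompt is placed vary by provider, so treat `build_messages` as a hypothetical helper rather than any particular vendor's API.

```python
from typing import Dict, List, Optional

# The example system prompt, applied to every request.
SYSTEM_PROMPT = (
    "You are a technical communication assistant. "
    "Use clear, concise language for non-technical audiences; avoid jargon; "
    "provide step-by-step instructions; cite source documentation."
)

Message = Dict[str, str]


def build_messages(user_request: str, history: Optional[List[Message]] = None) -> List[Message]:
    """Hypothetical helper: system prompt first, then prior turns, then the new request."""
    messages: List[Message] = [{"role": "system", "content": SYSTEM_PROMPT}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_request})
    return messages


if __name__ == "__main__":
    turn_1 = build_messages("How do I export a report?")
    history = turn_1[1:] + [{"role": "assistant", "content": "1. Open Reports. 2. Select Export."}]
    turn_2 = build_messages("Can I export to PDF?", history)
    # The same system message leads both requests, so the behavior persists across turns.
    print(turn_1[0] == turn_2[0], len(turn_2))
```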

Tool integration

Context-engineered systems can invoke external tools: querying databases, updating content management systems, checking translation memory, or triggering review workflows. This transforms AI from a writing aid into an active participant in the documentation pipeline.
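Tool integration usually works by letting the model emit a structured request that the application then executes. This sketch assumes the model returns a JSON object of the form `{"name": ..., "arguments": {...}}`; real providers each define their own tool-calling format. Both tools, `check_translation_memory` and `trigger_review`, are hypothetical placeholders for calls into a translation memory and a review workflow.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the model can request it by name."""
    TOOLS[fn.__name__] = fn
    return fn


# Hypothetical translation memory; a real system would query a TM server or CAT tool.
TRANSLATION_MEMORY = {("Save", "de"): "Speichern", ("Cancel", "de"): "Abbrechen"}


@tool
def check_translation_memory(source: str, target_lang: str) -> str:
    """Look up an approved translation for a UI string."""
    return TRANSLATION_MEMORY.get((source, target_lang), "NO_MATCH")


@tool
def trigger_review(doc_id: str, reviewer: str) -> str:
    """Placeholder for a call into a CMS or review workflow."""
    return f"Review of {doc_id} assigned to {reviewer}"


def dispatch(tool_call_json: str) -> str:
    """Run a model-issued call shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call.get("name", ""))
    if fn is None:
        return f"ERROR: unknown tool {call.get('name')!r}"
    try:
        return str(fn(**call.get("arguments", {})))
    except TypeError as exc:  # missing or unexpected arguments from the model
        return f"ERROR: bad arguments for {call['name']!r}: {exc}"


if __name__ == "__main__":
    print(dispatch('{"name": "check_translation_memory", '
                   '"arguments": {"source": "Save", "target_lang": "de"}}'))
```

The returned string, including any error message, is what the application would add back to the conversation so the model can use the result or correct its request.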

Context window optimization

Every LLM has a fixed context window, measured in tokens, that limits how much information it can process in a single request. Context engineering employs summarization, chunking, and prioritization strategies to fit essential information within this limit while preserving meaning. This is critical for technical documentation, which often includes lengthy specifications.
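Prioritization can be sketched as a greedy packer: sort candidate context items by importance and keep adding them while they fit a token budget. This assumes one token per word as a rough estimate; real tokenizers count differently, so production code should use the model provider's tokenizer. `ContextItem` and `pack_context` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContextItem:
    label: str
    text: str
    priority: int  # higher means more important to keep


def approx_tokens(text: str) -> int:
    """Rough estimate of one token per word; real tokenizers count differently."""
    return len(text.split())


def pack_context(items: List[ContextItem], budget: int) -> List[ContextItem]:
    """Greedily keep the highest-priority items that still fit within the budget."""
    chosen: List[ContextItem] = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority, reverse=True):
        cost = approx_tokens(item.text)
        if used + cost <= budget:  # skip items that would overflow; smaller ones may still fit
            chosen.append(item)
            used += cost
    return chosen


if __name__ == "__main__":
    items = [
        ContextItem("full spec", "word " * 500, priority=4),
        ContextItem("system prompt", "Use clear concise plain language", priority=10),
        ContextItem("user question", "How do I reset the device", priority=9),
        ContextItem("faq entry", "Reset the device by holding power ten seconds", priority=7),
    ]
    print([item.label for item in pack_context(items, budget=100)])
```

Items that are dropped, such as a long specification, are candidates for the other two strategies: summarizing them or splitting them into smaller chunks.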

Workflow orchestration

Complex documentation projects require coordinated steps: research, drafting, review, localization, and publishing. Orchestration sequences these activities, passing context forward at each stage and adapting instructions based on intermediate results.
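The pattern described above can be sketched as a sequence of functions that read and extend a shared context object. Every stage here is a hypothetical stand-in (research would call a retriever, draft would call a model, localize would call a translation service), and the review rule is invented for illustration. What the sketch shows is context passed forward and the drafting instruction adapting to review feedback.

```python
from typing import Any, Dict

Context = Dict[str, Any]


def research(ctx: Context) -> Context:
    # Stand-in for retrieval; a real stage would query a RAG index.
    ctx["sources"] = [f"{ctx['topic']} specification"]
    return ctx


def draft(ctx: Context) -> Context:
    # Stand-in for a model call; the instruction adapts to earlier review feedback.
    text = f"How to configure {ctx['topic']}."
    if "add a source citation" in ctx.get("issues", []):
        text += f" Source: {ctx['sources'][0]}"
    ctx["draft"] = text
    return ctx


def review(ctx: Context) -> Context:
    # Hypothetical review rule: every draft must cite a source.
    ctx["issues"] = [] if "Source:" in ctx["draft"] else ["add a source citation"]
    return ctx


def localize(ctx: Context) -> Context:
    # Stand-in for translation; tags each copy with its target language.
    ctx["locales"] = {lang: f"[{lang}] {ctx['draft']}" for lang in ctx.get("languages", ["en"])}
    return ctx


def run_pipeline(ctx: Context, max_rounds: int = 3) -> Context:
    """Research once, loop draft/review until clean, then localize for publishing."""
    ctx = research(ctx)
    for _ in range(max_rounds):
        ctx = review(draft(ctx))
        if not ctx["issues"]:
            return localize(ctx)
    raise RuntimeError(f"Unresolved review issues: {ctx['issues']}")


if __name__ == "__main__":
    result = run_pipeline({"topic": "backups", "languages": ["en", "de"]})
    print(result["locales"]["de"])
```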

Examples of context engineering in action

With the core methods outlined, consider how they combine in two example AI systems:

Writing assistant

A documentation team implements a context-engineered writing assistant for product user guides. The system integrates:

- RAG to retrieve product specifications, API documentation, and support FAQs from internal repositories

- Memory to track the team's style guide preferences, approved terminology, and feedback from previous releases

- System prompts defining audience, tone, and structure

- Workflow orchestration guiding technical communicators from outline to draft to technical review, with context adjusted at each stage

Results could include faster draft completion, measurably improved consistency across documents, and higher user satisfaction scores for documentation clarity. The assistant doesn't replace technical communicators; it frees them to focus on complex explanations and architectural decisions while automating retrieval, formatting, and first-draft generation.
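One piece of this assistant, checking drafts against the approved terminology held in memory, can be sketched with regular expressions. The glossary entries are hypothetical examples, and a real check would also need to handle inflections and context that simple pattern matching misses.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical team glossary: discouraged term -> approved term (e.g. loaded from memory).
PREFERRED_TERMS = {
    "e-mail": "email",
    "click on": "select",
    "color": "colour",  # this team prefers British English spelling
}


def check_terminology(text: str, glossary: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (found, preferred) pairs for each discouraged term in the text."""
    findings = []
    for discouraged, preferred in glossary.items():
        # Word boundaries avoid matching inside longer words; matching is case-insensitive.
        pattern = r"\b" + re.escape(discouraged) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            findings.append((match.group(0), preferred))
    return findings


if __name__ == "__main__":
    draft = "Click on Save to e-mail the color chart."
    for found, preferred in check_terminology(draft, PREFERRED_TERMS):
        print(f"Replace '{found}' with '{preferred}'")
```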

AI customer support agent

This is a broader application of context engineering that enables AI systems to move from generic helpers to specialized, reliable team members.

A generic prompt like "Please describe your issue" puts the burden on the user and relies on the model's general knowledge. A context-engineered agent, however, operates within a rich information environment:

- Memory stores the user’s ticket history and product preferences

- RAG pulls relevant troubleshooting steps, product manuals, and known issues from a real-time knowledge base

- A system prompt defines the agent's role, tone, and escalation policies (for example, "You are a helpful support agent for our software. Always verify subscription status before offering advanced help. Escalate to a human if the user expresses high frustration.")

- Tool use allows the agent to call APIs to check subscription status or create a new support ticket

- Workflow orchestration guides the interaction from issue classification to context retrieval, response generation, and finally, a follow-up to confirm resolution and update the user's memory profile
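Rules written only into a system prompt are instructions the model may not always follow. One design option, sketched here, is to also enforce critical policies such as subscription verification and escalation in orchestration code before a reply is generated. The subscription table, the premium-tier rule, the keyword-based frustration check (a crude stand-in for a classifier or sentiment model), and the `decide` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for a subscription API and a frustration classifier.
SUBSCRIPTIONS = {"u-100": "premium", "u-200": "basic"}
FRUSTRATION_MARKERS = ("unacceptable", "furious", "third time", "waste of time")


@dataclass
class Decision:
    action: str  # "escalate", "advanced_help", or "basic_help"
    reason: str


def is_highly_frustrated(message: str) -> bool:
    """Crude keyword heuristic; a real agent would use a classifier or the model itself."""
    text = message.lower()
    return any(marker in text for marker in FRUSTRATION_MARKERS)


def decide(user_id: str, message: str, needs_advanced_help: bool) -> Decision:
    """Apply the system prompt's escalation and verification rules in code."""
    if is_highly_frustrated(message):
        return Decision("escalate", "user expressed high frustration")
    if needs_advanced_help:
        tier = SUBSCRIPTIONS.get(user_id, "none")  # verify status before advanced help
        if tier == "premium":
            return Decision("advanced_help", "subscription verified: premium")
        return Decision("basic_help", f"advanced help requires premium, found {tier}")
    return Decision("basic_help", "standard troubleshooting")


if __name__ == "__main__":
    print(decide("u-200", "How do I configure single sign-on?", needs_advanced_help=True))
```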

Context engineering elevates prompt engineering

Context engineering does not replace prompt engineering; it elevates it. Techniques such as Chain-of-Thought (CoT) prompting, which guides step-by-step reasoning, and Tree-of-Thought (ToT) prompting, which explores multiple solution paths, remain critical for complex tasks. The difference is that these prompts now operate within a rich contextual environment rather than in isolation.

For technical communicators, this means mastering both: prompt engineering provides precise task instructions, while context engineering ensures the model has the right knowledge, tools, and memory to execute reliably.

Where can you use context engineering?

Context engineering is applicable wherever AI systems are expected to operate reliably, adaptively, and at scale. Examples of key domains include, but are not limited to:

- Writing and content generation: Maintaining tone, style, and structure across long-form documents or collaborative writing sessions.

- Enterprise knowledge assistants: Integrating internal documents, tools, and workflows to support employees with accurate, contextual answers.

- Education and tutoring: Personalizing learning experiences by tracking student progress, adapting explanations, and managing long-term learning goals.

- Customer support automation: Building AI agents that remember user history, retrieve relevant documentation, and escalate appropriately.

- Software development agents: Orchestrating multi-step tasks like code generation, testing, and documentation using memory and tool integration.

- Legal and compliance tools: Ensuring consistent interpretation of regulations and case history across complex workflows.

- Healthcare assistants: Managing patient history, clinical guidelines, and tool integrations for safe and context-aware decision support.

In short, if the AI system needs to remember, reason, or coordinate, context engineering is likely essential.

Challenges and governance considerations

Before implementing context engineering, some concerns need to be addressed:

- Data security: Ensuring confidential documentation remains protected within RAG systems and that access controls mirror existing information governance.

- Context window limits: Managing the tradeoff between comprehensive context and token constraints.

- Integration complexity: Connecting AI systems with legacy content management platforms and workflow tools.

- Quality assurance: Validating that retrieved information is current, accurate, and appropriate for the task.

- Change management: Training documentation teams on new skills, like semantic search, memory structure design, and workflow orchestration.
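The data security and quality assurance points can be partly enforced in the retrieval layer. This sketch assumes each passage carries the permission groups of its source system and a last-reviewed date. It drops passages the user may not see before anything reaches the model, and it separates out content older than an assumed one-year review window. `Passage` and `filter_passages` are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import FrozenSet, List, Set, Tuple


@dataclass
class Passage:
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]  # copied from the source system's permissions
    last_reviewed: date


def filter_passages(
    passages: List[Passage], user_groups: Set[str], today: date, max_age_days: int = 365
) -> Tuple[List[Passage], List[str]]:
    """Return (usable passages, IDs of permitted but stale passages needing review)."""
    usable: List[Passage] = []
    stale: List[str] = []
    for p in passages:
        if not (p.allowed_groups & user_groups):
            continue  # access control: the model never sees what the user cannot see
        if today - p.last_reviewed > timedelta(days=max_age_days):
            stale.append(p.doc_id)  # quality check: route to a human reviewer instead
        else:
            usable.append(p)
    return usable, stale


if __name__ == "__main__":
    passages = [
        Passage("public-faq", "Reset steps", frozenset({"everyone"}), date(2025, 1, 10)),
        Passage("internal-runbook", "Server restart", frozenset({"support"}), date(2025, 1, 10)),
        Passage("old-guide", "Legacy setup", frozenset({"everyone"}), date(2022, 1, 1)),
    ]
    usable, stale = filter_passages(passages, {"everyone"}, today=date(2025, 6, 1))
    print([p.doc_id for p in usable], stale)
```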

Evolving competencies

As AI becomes infrastructure, context engineering can expand the technical communicator's role into that of an "AI content architect". Key competencies could include:

- Information retrieval design: Moving beyond keyword search to understand and design semantic retrieval systems. This means structuring source content so it can be effectively found and used by RAG systems.

- System prompt and persona crafting: Defining the core identity, tone, and behavioral rules for AI assistants, encoding the organization's voice and brand into the model's foundational instructions.

- Memory and knowledge graph management: Curating the structured data that forms an AI's long-term memory, ensuring that institutional knowledge is accurate, current, and accessible.

- Workflow orchestration and logic: Designing the multi-step logic that AI agents follow, defining how context is passed between stages of a documentation or support process.
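As an example of structuring content for retrieval, the sketch below splits a Markdown topic at its level-2 headings and attaches metadata a retriever can filter on. The heading convention, the metadata fields, the product name, and the `chunk_by_heading` name are assumptions; many teams chunk by topic type, DITA element, or token count instead.

```python
import re
from typing import Dict, List


def chunk_by_heading(doc_id: str, markdown: str, product: str) -> List[Dict[str, str]]:
    """Split Markdown at level-2 headings; each chunk keeps its heading plus metadata."""
    chunks: List[Dict[str, str]] = []
    # Zero-width split before every line that starts with "## " (Python 3.7+).
    for section in re.split(r"(?m)^(?=## )", markdown):
        section = section.strip()
        if not section:
            continue
        first_line = section.splitlines()[0]
        title = first_line[3:].strip() if first_line.startswith("## ") else "Introduction"
        chunks.append({
            "id": f"{doc_id}#{len(chunks)}",
            "title": title,
            "product": product,
            "text": section,  # heading stays in the text so the chunk reads on its own
        })
    return chunks


if __name__ == "__main__":
    doc = "Overview of backups.\n## Install\nRun the installer.\n## Restore\nOpen Backups and select Restore."
    for chunk in chunk_by_heading("backup-guide", doc, product="ExampleApp"):
        print(chunk["id"], chunk["title"])
```

Keeping the heading inside each chunk means a retrieved passage still says what it is about, even when it is shown to the model without the rest of the document.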

By developing these skills, technical communicators can position themselves not just as content creators but as architects of intelligent documentation systems.

Implementation roadmap for documentation teams

Context engineering can be adopted through a structured approach:

Phase 1: Audit and map

Review existing knowledge sources (documentation, wikis, support tickets), identify integration points with content management systems, and document current workflows where AI could add value.

Phase 2: Define governance

Establish access controls for sensitive content, compliance requirements for RAG sources, and quality verification processes to catch errors or hallucinations.

Phase 3: Pilot narrow use cases

Start with focused applications like FAQ generation, terminology consistency checking, or first-draft creation for repetitive documentation types. Define success metrics: accuracy, time savings, and user satisfaction.

Phase 4: Scale and iterate

Expand successful pilots to broader workflows, integrate feedback loops for continuous improvement, and build organizational capabilities around context design and maintenance.

Future directions

Context engineering is evolving alongside emerging AI capabilities such as:

- Autonomous optimization: Systems that self-tune context based on usage patterns and outcomes

- Multimodal integration: Context spanning text, diagrams, video, and augmented reality interfaces

- Predictive retrieval: Anticipating what information users will need before they ask

- Cross-system orchestration: Context that flows seamlessly across documentation, support, learning, and product interfaces

As these advances materialize, context engineering will most likely emerge as a distinct professional discipline – one where technical communicators' expertise in information architecture, audience analysis, and structured content positions them as natural leaders.

Conclusion

Context engineering represents a fundamental shift in how enterprises deploy AI, moving from isolated prompt experiments to systematically designed information environments. For technical communicators, this shift offers both opportunity and responsibility: the opportunity to build documentation systems that are genuinely adaptive and intelligent, and the responsibility to ensure those systems remain accurate, secure, and aligned with user needs.

The future of enterprise AI isn't just about asking better questions; it's about architecting the knowledge foundations that make intelligent answers possible.
