Artificial Intelligence (AI) has come a very long way since computer scientist Alan Turing raised the question “Can machines think?” in 1950. The further we have progressed in unlocking AI’s possibilities (the “can”), the more pressing the need to establish AI ethics (the “ought to”) has become. Now that we can create AI, should we?

For decades, futurists have predicted “the singularity”, a point at which AI wholly controls the world, leaving humanity as far behind as Homo sapiens has left lions and ants. Popular fiction has given us glimpses of a world where AI is as intelligent as humans, or even more so. Tales such as The Terminator (1984) foresee a world in which an AI starts a nuclear war that all but destroys civilization.

These remain fantastic scenarios. Yet today, forms of AI are used across industries and have taken their place in our everyday lives. We may not have the Terminator’s AI Skynet, but we do have Alexa. AI is in refrigerators, dishwashers, call centers, and vacuum cleaners. Less dramatic than their fictional counterparts, these mundane devices (and their descendants) already raise ethical questions.

Is there a point at which we need to consider AI a person? And if so, are we morally obliged to it, as we are to people (and indeed animals)? Does AI have rights like we do, or are we only as bound to it as we are to a particularly sophisticated toaster?

As humans surrounded by AI, how do we know that we can trust these devices? As developers of AI, how do we ensure we design devices that can be trusted?

How do we ensure we have trustworthy AI?

Trustworthy AI

In 2019, the independent High-Level Expert Group on Artificial Intelligence (AI HLEG) presented a set of guidelines for the ethical development and use of AI to the European Commission (EC). According to the EC’s Ethics Guidelines for Trustworthy AI (henceforth, Ethics Guidelines), an AI is worthy of trust (trustworthy AI) if it displays three qualities: it is lawful, ethical, and robust (Ethics Guidelines, p. 2).

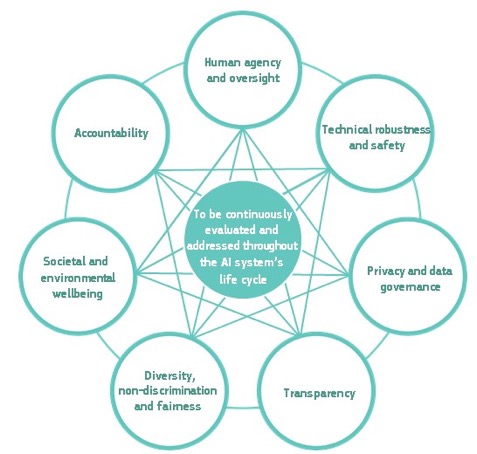

The guidelines then outline and explain the last two qualities. For AI to be ethical and robust, i.e., to be trustworthy, the AI’s lifecycle (its development, its deployment, and the information disseminated about it) must meet seven key requirements:

- Human agency and oversight

- Technical robustness and safety

- Privacy and data governance

- Transparency

- Diversity, non-discrimination, and fairness

- Societal and environmental wellbeing

- Accountability

All the requirements are of equal importance, support each other, and should be implemented and evaluated throughout the AI system’s lifecycle. If an AI lifecycle does not meet these requirements, it cannot be considered trustworthy (and ethical) AI.

Most of the requirements relate to the design and development of AI-enabled devices. However, one requirement – transparency – impacts the documentation of AI. So, how might transparency be relevant to technical writing for AI?

Figure 1: Interrelationship of the seven requirements for Trustworthy AI

Source: Ethics Guidelines, p. 17

Transparency

At the heart of the transparency requirement is information about the AI for developers, end users, and other stakeholders. It demands careful documentation and particular attention to the specifics of the audience.

According to the Ethics Guidelines, the transparency requirement is wide-ranging, concerning all the “data, system, and business models” around AI. It is closely linked to the principle of explicability, which holds that AI processes must be transparent, with “the capabilities and purpose of AI systems openly communicated, and decisions – to the extent possible – explainable to those directly and indirectly affected” (Ethics Guidelines, p. 15). This enables humans to intervene in the AI’s decisions if needed. They cannot do so if those decisions are inexplicable.

At my talk at the tcworld conference in November 2022, I was asked about “black box” algorithms, where even AI developers themselves cannot explain how they work. According to the Ethics Guidelines, these cases require special attention: “Other explicability measures (e.g. traceability, auditability and transparent communication on system capabilities) may be required, provided that the system as a whole respects fundamental rights.”

In general, then, transparency (and thus trustworthy AI) requires technical documentation that makes the various elements of AI explicit. The guidelines go into more detail on three specific aspects of transparency: traceability, explainability, and communication. Do these aspects require work by technical writers?

- Traceability: For AI to be trustworthy, its elements must be traceable, enabling the identification of “reasons why an AI-decision was erroneous which, in turn, could help prevent future mistakes” (p. 18). Traceability requires various elements of the AI to be “documented to the best possible standard”. Such elements include data sets, the processes yielding AI system decisions, data gathering and data labeling, the algorithms used, and the AI system decisions themselves. This documentation also helps explain and audit the AI.

- Explainability: It must be possible to explain both the technical processes of an AI system and the related human decisions (p. 18). There should also be explanations “of the degree to which an AI system influences and shapes the organizational decision-making process, design choices of the system, and the rationale for deploying it.”

  Indeed, not only must such an explanation be possible but, if an AI system has “a significant impact on people’s lives,” stakeholders should be able to demand an explanation of the AI system’s decision-making process, adapted to their expertise, whether they are laypeople, regulators, or researchers.

- Communication: AI systems must not represent themselves as human. “Users have a right to be informed that they are interacting with AI.” Furthermore, where needed to “ensure compliance with fundamental rights,” there should be an option for users to avoid interacting with AI and engage with humans instead. Finally, the system’s capabilities and limitations should be communicated appropriately to stakeholders.

The first two aspects of transparency, traceability and explainability, clearly involve technical documentation. There must be documentation that makes it easy to trace an AI system’s decision-making and to explain, for example, why it was deployed in a particular environment.

The last aspect, communication, depends less on technical documentation. How an AI communicates its nature, the alternatives to it, its capabilities, and its limitations may require some documentation, but it may also be enough for the AI to communicate such details itself. Unless technical writers author the actual speech of the AI, it is not obvious that they must be brought in for such tasks.

The path ahead

This outline of what is relevant to technical writers in the Ethics Guidelines is only an initial investigation into the role of technical writers in the development and deployment of trustworthy AI. By no means does it claim to be complete.

Here are a few of the questions that arose from my talk at the tcworld conference.

What role do tech writers have in ensuring the guidelines are part of AI development?

The Ethics Guidelines are only guidelines (albeit for ethical behavior), not rules or laws. A legal team on the project need not cover them (although it does cover the legal component of trustworthy AI, namely lawfulness). If the Ethics Guidelines are covered by any specialist team, it would be one focused on ethics. Technical writers do not focus on ethics (at least, no more than any other role in technological projects).

However, technical writers might play a part in pointing out that an AI project should include ethical guidelines. When I start on a documentation project for a client, I expect a style guide to be in place. In the unlikely event that there isn’t a style guide, I would raise the need for one, discuss desired styles, and perhaps even help the client work out which style guide might be most suitable to implement or adapt. Similarly, technical writers working on an AI project might soon expect it to come with an ethics guide. If there isn’t one, they could raise the need for one and suggest that an ethics guide such as the EC’s Ethics Guidelines be implemented or adapted.

Do we need ethical guidelines for technical writers who use AI?

Technical writers increasingly use AI in their work. So, can the Ethics Guidelines be applied to the work of technical writing itself? The guidelines are meant not only for development but also for deployment, and they concern the relationship between AI, its implementers, and its stakeholders. Such deployment could include technical writers using AI, and its stakeholders could include the technical writer’s clients.

During the discussion of this question at the tcworld conference, it was suggested that if technical writers produce documentation with AI rather than writing it themselves, they should a) inform their client of this and b) be ready to offer alternative, human-written documentation if the client requests it.

One commentator noted that we do not ask technical writers to do this with other technology. For example, technical writers are not required to inform clients that they use Oxygen rather than Microsoft Word to code DITA, nor do they offer the alternative of hand-coded DITA (presumably in Notepad, or perhaps on printed paper that the client can scan).

While this is certainly true, AI significantly alters the relationship between the author, the authoring technology, and the documentation. AI imitates what technical writers do. It doesn’t merely aid the writer; it may take on some of the decisions they typically make as part of their role. This raises the question of responsibility for the resulting documentation, especially when things go wrong. It matters whether AI or a human is responsible for a particular mistake. A flaw in documentation caused by defective AI code is significantly different from an identical flaw caused by a human author going through personal difficulties.

Another commentator raised the point that, in some situations, AI is (or is perceived to be) more trustworthy than a typical human. For example, when medical data is gathered for insurance purposes, some groups of users may be wary of the prejudices of certain human agents collecting their data. In this situation, they may be more comfortable with an AI agent, which they perceive as more trustworthy than a human.

This situation is relevant because it highlights the other side of transparent communication. Trustworthy AI identifies itself as AI, never masquerades as a human, and offers alternatives to interacting with it. However, humans may also learn something from AI. As AI increasingly takes the stage, a trustworthy human may need to identify themselves as human and, in some cases, offer AI as an alternative.

Again, these are only initial thoughts on ethical questions regarding AI and its relevance to documentation. From the discussions at the tcworld conference, I expect them to develop significantly. Yet, even at this stage, it is clear that technical writing has a role in the development of ethical, trustworthy Artificial Intelligence.

Acknowledgement

Many thanks to my colleagues and friends at Technically Write IT for feedback on early drafts of this talk, as well as support and encouragement up to and on the day. Thanks also to the audience at the talk and their thought-provoking questions, and finally, to the organizers of the tcworld conference and this magazine for the opportunity to present these ideas.

Further information

- European Commission (EC): Ethics Guidelines for Trustworthy AI

- Technically Write IT (TWi)

- Turing, A. 1950. “Computing Machinery and Intelligence”, Mind, 59. (Alan Turing’s seminal paper, in which he raised the question “Can machines think?”)