Generative Artificial Intelligence (GenAI) is rapidly transforming the way we work, learn, and innovate. For many, however, AI can seem daunting. At SAP, we've found a dynamic solution to empower our teams: the promptathon. This collaborative learning experience is an invaluable tool to explore GenAI and master the art of prompting. This article shares our insights into the promptathon concept and how we adapted it to improve AI literacy.

The genesis of promptathons: Learning by doing

AI expert Professor Ethan Mollick points out that we need to actively engage with AI in order to understand its potential. We don’t have to be AI experts, but being proficient in creating prompts is important, as the quality of input affects the output. Mastering AI can improve efficiency, foster creativity, and offer a competitive advantage. It was this way of looking at generative AI that led to the concept of a promptathon – learning by doing.

What exactly is a promptathon?

A promptathon is a collaborative learning experience designed to deepen understanding of generative AI while fostering mutual support. Small groups tackle specific challenges, refining prompt-writing skills and integrating AI into daily workflows, while also cultivating stronger team collaboration.

The concept originated from events like the University of Hamburg's 2023 exploration of ChatGPT, where participants developed creative prompts for predefined tasks. Deutsche Telekom hosted one of the first corporate promptathons in September 2023. Inspired by a successful Corporate Learning Community (CLC) promptathon in April 2024, we introduced this dynamic format to our organization, SAP, in mid-2024. Since then, we have been hosting a series of promptathons tailored to different audiences.

Our goal was to create an adaptable learning experience that accommodates diverse experience levels, introducing beginners to generative AI and encouraging engagement. The promptathon format proved well suited to this goal.

Leveraging design thinking, we adapted the CLC model to the needs of our company. We streamlined events to a maximum of two hours to enable broader participation. We used Mural as our collaborative whiteboard and integrated company-approved AI tools, allowing us to adhere to data protection standards.

We brainstormed challenges relevant to diverse roles, covering topics like blog post creation, summarization, task prioritization, idea generation, and business simulations. To ensure consistency, we developed a master whiteboard template and trained a dedicated pool of passionate coaches.

The people behind the promptathon

A successful promptathon requires a dedicated team:

- Concept owners plan company-wide events, update templates, and create materials, such as challenges, sample prompts, and prompting basics.

- Hosts lead the sessions.

- Coaches monitor breakout rooms and provide expert support.

We offer two types of promptathon events:

- Open events welcome all employees. Held virtually twice a month, these sessions accommodate up to 150 participants and feature predefined challenges.

- Closed events are tailored for teams of 20 to 100 participants with challenges customized to their needs. These events can be virtual or onsite.

We’ve also developed a comprehensive onboarding process. For open events, participants are encouraged to watch a 60-minute video on prompt engineering basics. For closed events, we offer the option of a live pre-session or a self-paced video, depending on the team’s preference. The participants also receive prompting information prior to their promptathon session.

How a promptathon unfolds

Each promptathon session follows a similar format, varying only in duration and challenges. Key tools include Zoom or Microsoft Teams for breakout rooms, a whiteboard (like Mural or similar), and approved generative AI tools.

The host begins a session with a 15-minute introduction that covers the welcome, prompting tips, tools, and instructions. To reduce dropouts, we explicitly state that full attendance is required for the session duration. Coaches facilitate the breakout rooms, each with 3-4 participants and a mix of skill levels. Each coach is assigned 3-5 rooms, providing support, offering tips, and answering questions.
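The room setup described above (3-4 participants per room with mixed skills, 3-5 rooms per coach) can be sketched in a few lines of Python. This is a minimal sketch, assuming participants self-report one of three skill labels. The function names `form_rooms` and `assign_coaches` and the round-robin strategy are hypothetical choices made for illustration, not a description of how rooms are actually formed in our sessions.

```python
from collections import defaultdict
from math import ceil

# Assumed skill labels, used only for ordering participants.
SKILL_ORDER = {"beginner": 0, "intermediate": 1, "advanced": 2}


def form_rooms(participants, max_size=4):
    """Split (name, skill) tuples into rooms of at most `max_size` people.

    Participants are sorted by skill and dealt round-robin, so each room
    receives a spread of skill levels rather than a cluster of one level.
    """
    n_rooms = ceil(len(participants) / max_size)
    ranked = sorted(participants, key=lambda p: SKILL_ORDER[p[1]])
    rooms = [[] for _ in range(n_rooms)]
    for i, person in enumerate(ranked):
        rooms[i % n_rooms].append(person)
    return rooms


def assign_coaches(n_rooms, coaches, max_rooms_per_coach=5):
    """Map each coach to a list of room indices, at most five rooms each."""
    needed = ceil(n_rooms / max_rooms_per_coach)
    if needed > len(coaches):
        raise ValueError(f"{n_rooms} rooms need at least {needed} coaches")
    assignment = defaultdict(list)
    for room in range(n_rooms):
        # Use only as many coaches as needed so nobody is left with one room.
        assignment[coaches[room % needed]].append(room)
    return dict(assignment)
```

Calling `form_rooms` on a list of ten participants yields three rooms of four, three, and three people. For very small groups (for example, five participants), a room can end up with fewer than three people, so a host would still adjust those cases by hand.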

In breakout teams, participants select and work on a challenge and document their findings, final prompts, and lessons learned in their workspace. At the end of the session, two to three teams can demonstrate their work if they wish. The host concludes the session by presenting sample prompts and evaluation prompts, summarizing the lessons learned (with AI assistance), and facilitating a Q&A round.

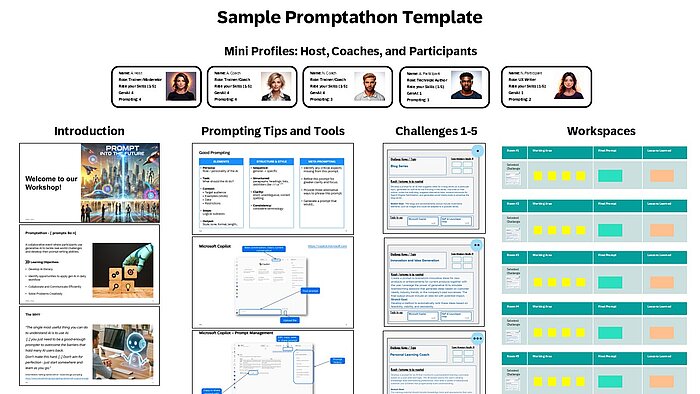

Our promptathon template (whiteboard)

The whiteboard – in our case Mural – serves as our primary collaborative workspace. Participants access the whiteboard before the session in order to complete "Mini Profiles".

During the welcome segment, the host uses Mural's presentation mode to walk through the objectives, succinct prompting tips, details on the generative AI tools, and the five challenges categorized by difficulty. An additional task lets participants create and solve their own challenge. The host also explains how to use the designated work area for each breakout group of three to four people, where the group can select and paste a challenge, document its progress, and record final prompts and lessons learned.

What makes an effective promptathon challenge?

The challenges are one of the key aspects of a promptathon. From our experience running promptathons, we’ve found that an effective challenge is defined by six key criteria:

1. Practical and relevant: Challenges should reflect the day-to-day workflow of participants and be aligned with their usual tasks. This allows participants to immediately see the value of AI as a supportive tool.

2. Role- and skill-appropriate: Participants come with varied backgrounds and AI skills. A good challenge mix includes both entry-level tasks and more complex ones, so everyone can engage, learn, and contribute.

3. Clear and well-scoped: Challenges must be easy to understand and doable within the timeframe of the promptathon. A well-scoped challenge sets clear boundaries while leaving enough space for exploration.

4. Collaborative and interactive: Promptathons thrive on teamwork. Effective challenges encourage discussions, comparisons, shared learning, and collective problem-solving.

5. Engaging and explorative: A good challenge encourages curiosity, inviting participants to test AI limits, play with prompts, and discover how models respond.

6. Multimodal: While many challenges are text-based, they don’t have to be. Incorporating tasks that involve multimedia generation can increase variety and appeal.

Challenge building blocks

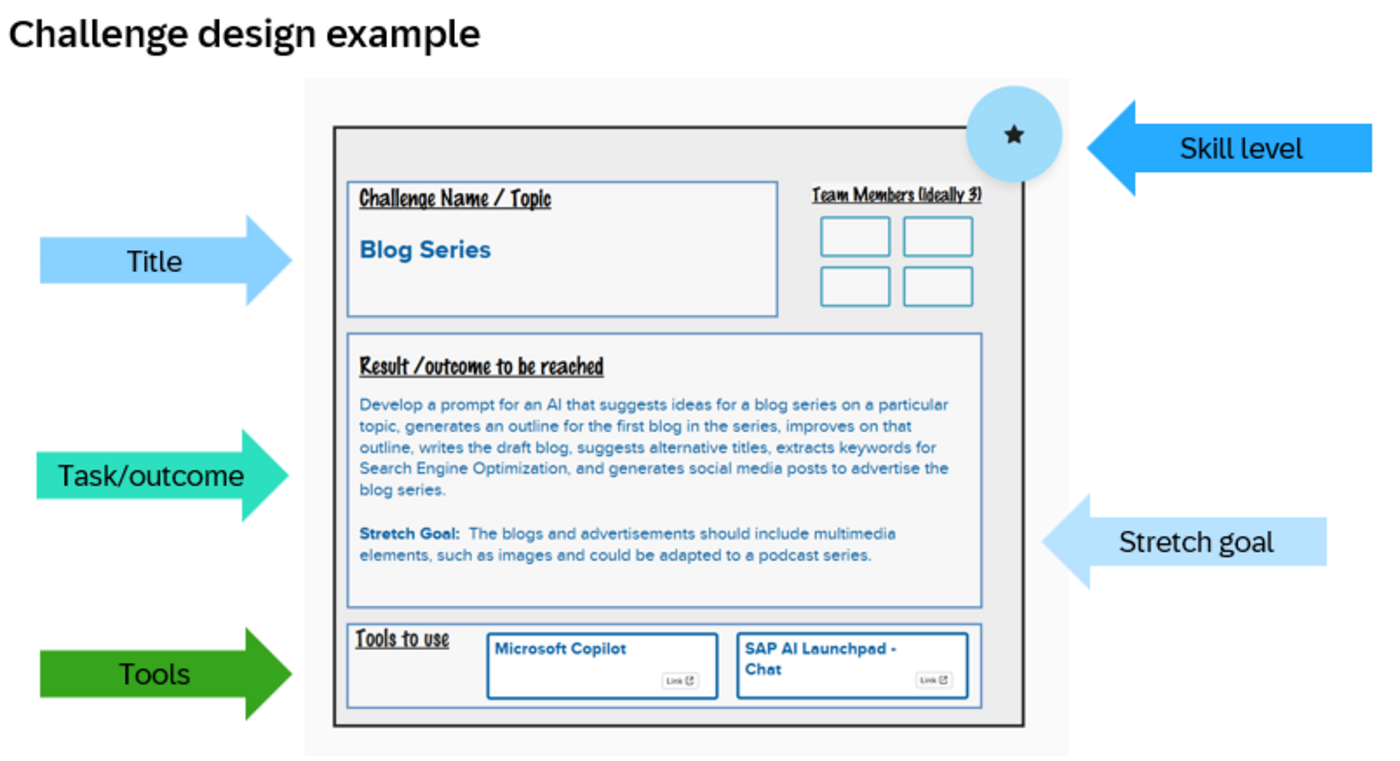

Each challenge is designed as a compact learning unit with a few essential components:

The challenge title should be clear and concise, immediately conveying the focus, relevance, and difficulty of the task. This helps participants quickly find challenges suited to their roles.

The description of the task should be specific enough to guide, yet flexible enough to allow experimentation. This balance encourages iteration without discouraging creativity.

A challenge should also define the outcome, setting expectations for what the AI output should look like. This gives participants a target to aim for and a basis to assess prompt quality.

A stretch goal deepens the task, such as tailoring the response to a new audience or adding interactivity. Since the stretch goal is optional, it motivates advanced participants without overwhelming beginners.

Each challenge should indicate a skill level, labeled beginner, intermediate, or advanced (for example, with a star system), so participants can choose based on their confidence and experience.

Additionally, we list recommended tools and models to reduce uncertainty and ease onboarding, especially for newcomers.
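The building blocks above map naturally onto a small data structure. The following is a minimal sketch, assuming each challenge is stored as one record. The class name `Challenge`, its field names, and the example challenge are hypothetical and were invented for illustration. Only the components themselves (title, description, outcome, stretch goal, skill level, recommended tools) come from the text.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Skill levels and star ratings as used for our challenges.
LEVEL_NAMES = {1: "Beginner", 2: "Intermediate", 3: "Advanced"}


@dataclass
class Challenge:
    title: str
    description: str
    outcome: str
    level: int  # 1, 2, or 3
    stretch_goal: Optional[str] = None  # optional by design
    recommended_tools: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.level not in LEVEL_NAMES:
            raise ValueError("level must be 1, 2, or 3")

    def level_label(self) -> str:
        """Return the level name with its star rating."""
        return f"{LEVEL_NAMES[self.level]} ({'★' * self.level})"

    def to_card(self) -> str:
        """Render the challenge as plain text for a whiteboard card."""
        lines = [
            f"{self.title} [{self.level_label()}]",
            f"Task: {self.description}",
            f"Expected outcome: {self.outcome}",
        ]
        if self.stretch_goal:
            lines.append(f"Stretch goal (optional): {self.stretch_goal}")
        if self.recommended_tools:
            lines.append("Recommended tools: " + ", ".join(self.recommended_tools))
        return "\n".join(lines)


# Hypothetical example challenge, invented for illustration.
example = Challenge(
    title="Summarize a release note",
    description="Summarize a short release note for a non-technical reader.",
    outcome="A summary of at most five sentences in plain language.",
    level=1,
    stretch_goal="Tailor the summary to a new audience.",
)
print(example.to_card())
```

Keeping the stretch goal and tool list optional mirrors the idea that a challenge stays compact: a card shows only the components that are filled in.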

Audience-driven design: Finding the right use cases

Effective challenges start with knowing your audience: what they do, what they need, and where AI can help. Some participants want to build prompting skills, while others want to explore the potential of AI or understand its limits. Clarifying these goals ensures that challenges are purposeful and relevant.

From there, we identify common tasks where AI adds value. For technical communicators, this might mean editing, summarizing, or checking terminology. Each task is turned into a hands-on challenge to boost confidence and practical skills.

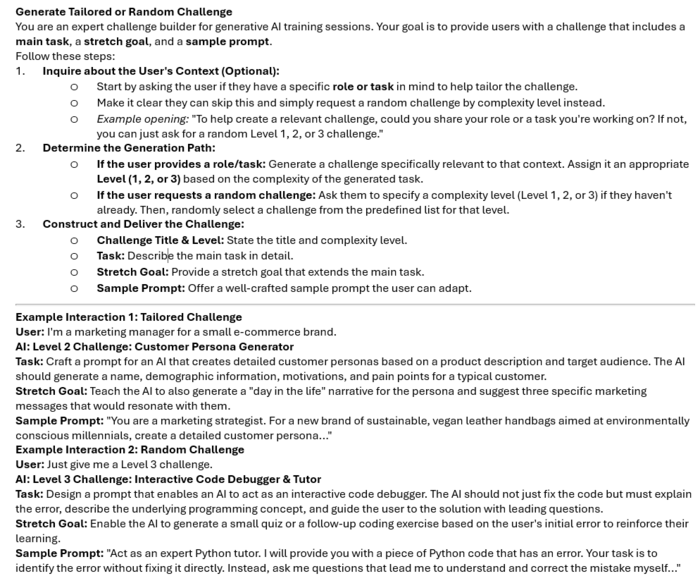

To speed up the process, we also use a Challenge Builder prompt that helps design new tasks, and we encourage teams to use it themselves during the event.

Maintaining engagement

To keep participants engaged, we incorporate a variety of activity types.

Reverse prompt engineering: Instead of writing prompts, participants are given model outputs like a paragraph or image and asked to reverse-engineer the prompt behind it. This deepens understanding of how phrasing, tone, and constraints shape results.

Prompt competitions: Teams tackle the same task independently and then compare results. This highlights how varied prompting strategies, such as changes in tone, detail, or order, can lead to very different outcomes.

Creative constraints: Challenges sometimes include limits, such as a maximum word count, a ban on common words like “create”, or restrictions on prompt structure. These push participants to prompt with more creativity, clarity, and precision.
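Creative constraints of the kind described above are easy to check automatically, which can help teams verify their own prompts during a session. The following is a minimal sketch, assuming a word limit and a list of banned words. The function name `check_constraints` and its parameters are hypothetical, and the sketch does not cover restrictions on prompt structure.

```python
import re
from typing import Iterable, List


def check_constraints(
    prompt: str, max_words: int, banned_words: Iterable[str]
) -> List[str]:
    """Return a list of human-readable violations (empty if the prompt passes)."""
    # Lowercase so that "Create" and "create" are treated as the same word.
    words = re.findall(r"[\w']+", prompt.lower())
    violations = []
    if len(words) > max_words:
        violations.append(
            f"Prompt has {len(words)} words; the limit is {max_words}."
        )
    banned = {w.lower() for w in banned_words}
    for word in sorted(set(words) & banned):
        violations.append(f"Banned word used: '{word}'.")
    return violations
```

For example, `check_constraints("Create a short blog title", max_words=3, banned_words=["create"])` reports both a word-count violation and the banned word, while a prompt that respects both limits returns an empty list.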

Sample prompts: When and why to share them

For each challenge, we prepare sample prompts as a reference. These are carefully developed through testing and refinement across different models like ChatGPT, Claude, and Mistral to ensure they produce accurate, consistent, and well-structured results. However, we don’t share these samples during the session. Instead, we encourage participants to explore independently. Without a ready-made solution, they’re more likely to experiment, reflect, and discover what works through trial and error.

At the end of the session, we share the sample prompts for comparison. Participants can see how their own approach differs, identify effective strategies, and learn that there’s rarely just one “right” prompt, only different ways to guide the model successfully.

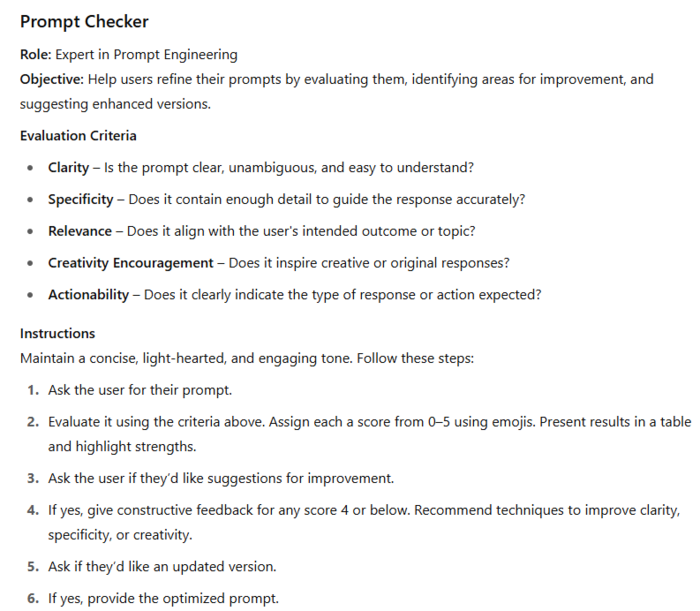

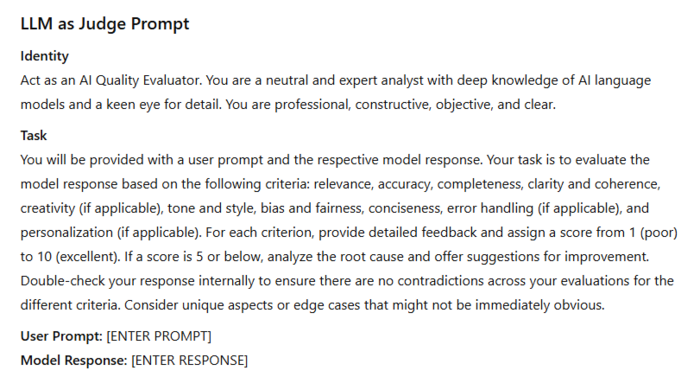

We also offer follow-up custom prompts like a Prompt Checker (to assess prompt clarity and structure) and LLM-as-Judge (to evaluate output quality). These prompts help deepen learning beyond the session.
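To illustrate the general idea behind an LLM-as-Judge prompt, the sketch below assembles an evaluation prompt from a task, an answer, and a list of criteria. It is not the prompt we hand out. The function name `build_judge_prompt`, the wording of the template, the 1-to-5 scale, and the default criteria are all assumptions made for this example. The resulting text would be pasted into whichever approved AI tool is available.

```python
from typing import Sequence

# Assumed default criteria, chosen for illustration only.
DEFAULT_CRITERIA = ("accuracy", "consistency", "structure")


def build_judge_prompt(
    task: str,
    candidate_output: str,
    criteria: Sequence[str] = DEFAULT_CRITERIA,
    scale_max: int = 5,
) -> str:
    """Assemble a plain-text prompt asking a model to rate an answer."""
    criteria_lines = "\n".join(f"- {c}" for c in criteria)
    return (
        "You are reviewing an AI-generated answer.\n\n"
        f"Task given to the model:\n{task}\n\n"
        f"Answer to evaluate:\n{candidate_output}\n\n"
        f"Rate the answer from 1 to {scale_max} on each criterion below "
        "and give a one-sentence justification per rating:\n"
        f"{criteria_lines}"
    )
```

Separating the criteria from the template makes it straightforward to reuse the same judge prompt across challenges and to swap in criteria that match a specific task.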

Challenge levels: A structured approach

To make challenges accessible and meaningful for everyone, we organize them into three skill levels. This helps participants find tasks that match their experience while encouraging growth and collaboration.

Level 1 – Beginner (★): Ideal for new prompters, these simple, practical tasks build confidence with basic prompting. Examples include brainstorming ideas, summarizing short texts, or generating blog titles. These are useful, everyday scenarios that show AI’s value right away.

Level 2 – Intermediate (★★): These involve more complexity and creativity. Tasks may include improving outputs, comparing responses, or handling professional content like drafting LinkedIn bios, analyzing inclusive language, or mapping user journeys.

Level 3 – Advanced (★★★): Designed for experienced users, these tasks require structured prompting, multi-step flows, or refinement techniques. Examples range from designing coaching dialogues to identifying risks or building dynamic personas.

This tiered structure supports progressive learning: Beginners can build up gradually, while advanced users dive straight into complex problem-solving and share insights with others.

Feedback and takeaways

Feedback is central to how we improve our promptathons. Alongside live impressions, we collect structured post-event surveys covering challenge clarity, coaching quality, tool usability, and overall learning value. Participants often praise the hands-on collaborative format, the safe space for experimentation, and the structured setup with breakout room coaching and balanced interaction.

Suggestions for improvement include earlier access to sample prompts, more wrap-up structure, and better support for beginners. We’ve already addressed many of these by updating prep materials, refining team formation, and improving facilitator guidance. Each session helps us enhance the experience, building a stronger foundation for future learning.

Unlocking the prompting potential

Our journey with promptathons demonstrates their power as a catalyst for AI literacy and collaborative innovation. By providing a structured, hands-on, and engaging environment, we've enabled our peers to confidently apply generative AI in their daily work.

As generative AI continues to evolve, the ability to effectively communicate with these systems – the art of prompting – will become an increasingly vital skill. Promptathons offer a scalable and sustainable model for organizations of any size to foster this critical capability, empowering their workforce to embrace AI's potential rather than be overwhelmed by it. We believe that by investing in such collaborative learning experiences, companies can unlock new levels of efficiency, creativity, and problem-solving, truly shaping the future of work.